RAG-TA: RAG-based Intelligent Teaching Assistant System

The RAG Intelligent Teaching Assistant System is an intelligent teaching-assistance platform built on Multimodal Retrieval-Augmented Generation (Multimodal RAG) and designed specifically for educational scenarios. It integrates the following core functions:

🤖 Intelligent Question Answering
- Multimodal Understanding: answers complex questions that combine text and images, including charts, formulas, and figures in course materials.
- Contextual Retrieval: precise semantic retrieval backed by the ChromaDB vector database.
- Hybrid Retrieval: combines dense vector retrieval with sparse retrieval (BM25) to improve retrieval accuracy.
- Source Tracing: automatically annotates each answer with its source file and page number, so information stays traceable.

📚 Knowledge Base Management
- Multi-format Support: PDF, PPTX, DOCX, TXT, MD, and image files.
- Intelligent Indexing: automatically extracts text and image content to build a multimodal vector index.
- Incremental Updates: detects file changes and re-indexes only the changed parts, which saves time.
- Folder Management: hierarchical directories for organizing course materials.

💬 Conversation Management
- History: saves and manages the complete conversation history.
- Multiple Answer Options: keeps several answer versions for the same question and lets users switch between them.
- Folder Classification: groups conversations into folders, for example by course.
- Thinking Mode: visualizes the AI's reasoning process to make the answer logic easier to follow.

🖼️ Multimodal Interaction
- Image Understanding: automatically extracts and describes images in PDFs/PPTXs.
- Real-time Upload: users can upload images and documents and ask questions about them immediately.
- Visual Question Answering: answers that combine image content with text knowledge.
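The Hybrid Retrieval item does not say how dense and sparse results are merged. The sketch below assumes Reciprocal Rank Fusion (RRF) as the merging rule and uses toy 2-D vectors in place of the embeddings ChromaDB would store: it scores documents with a small self-written BM25, ranks them by cosine similarity, and fuses the two rankings. The tokenizer, `hybrid_search`, and the constants `k1=1.5`, `b=0.75`, `k=60` are illustrative assumptions, not the project's code.

```python
import math
from collections import Counter

def tokenize(text):
    # Hypothetical tokenizer: lowercase and split on whitespace.
    return text.lower().split()

def bm25_scores(query, docs, k1=1.5, b=0.75):
    # Sparse retrieval: classic BM25 score of every document for the query.
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    df = Counter()
    for toks in tokenized:
        df.update(set(toks))  # document frequency of each term
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def cosine(u, v):
    # Dense retrieval: cosine similarity between two embedding vectors.
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def rrf_fuse(rankings, k=60):
    # Reciprocal Rank Fusion: add 1 / (k + rank) for every ranking a doc appears in.
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]

def hybrid_search(query, query_vec, docs, doc_vecs, top_k=3):
    # Rank by each retriever separately, then fuse the two rankings.
    sparse = bm25_scores(query, docs)
    dense = [cosine(query_vec, v) for v in doc_vecs]
    ids = range(len(docs))
    sparse_rank = sorted(ids, key=lambda i: -sparse[i])
    dense_rank = sorted(ids, key=lambda i: -dense[i])
    return rrf_fuse([sparse_rank, dense_rank])[:top_k]

docs = [
    "gradient descent converges slowly",
    "newton method uses the hessian",
    "bm25 is a sparse retrieval method",
]
doc_vecs = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]  # toy stand-ins for real embeddings
result = hybrid_search("sparse retrieval", [0.1, 1.0], docs, doc_vecs)
print(result)  # [2, 0, 1]
```

RRF only needs ranks, not scores, so it sidesteps the fact that BM25 scores and cosine similarities live on different scales; a weighted sum of normalized scores is a common alternative.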

Dec 12, 2025

✍️ In recent months, I have done some interesting projects, including ViT on CIFAR-10, Simple ML, ASR, etc.

Jul 1, 2025

I dove into the world of Automatic Speech Recognition (ASR) by building a Large Vocabulary Continuous Speech Recognition (LVCSR) system using the Kaldi toolkit.

Jun 25, 2025

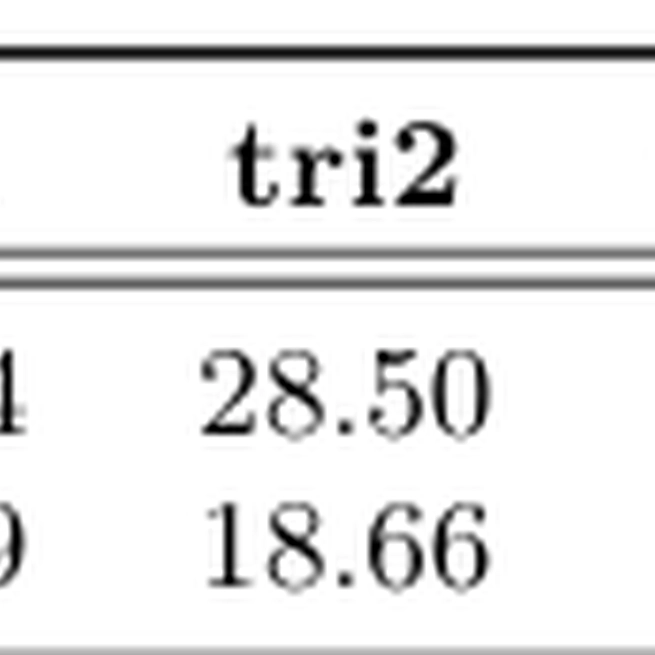

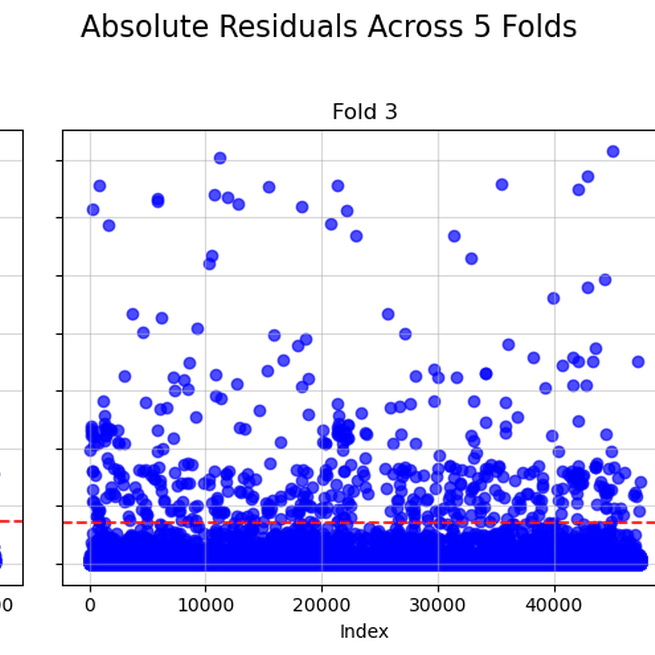

TripDataset Machine Learning Project

This project is a complete implementation of machine learning pipelines applied to the TripDataset, focusing on data preprocessing, classification, and regression tasks, including:
- 🧹 Data preprocessing and cleaning (handling missing values, outlier detection, normalization, and feature engineering)
- 🤖 Model training for classification and regression (various ML algorithms for categorical and continuous prediction tasks)
- 📊 Performance evaluation and metrics (accuracy, F1-score, RMSE, and other evaluation techniques)
- 🔍 Exploratory data analysis and visualization (insightful plots of feature relationships, distributions, and model performance)
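The three cleaning steps named in the first bullet can be illustrated on a single numeric column. This is a minimal stdlib sketch, assuming median imputation, Tukey-fence (IQR) clipping, and z-score normalization; the project may use different rules, and the `durations` column is hypothetical.

```python
import statistics

def impute_median(values):
    # Missing values: replace None with the median of the observed entries.
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def clip_iqr(values, k=1.5):
    # Outliers: clip anything outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey fences).
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]

def zscore(values):
    # Normalization: zero mean, unit (population) standard deviation.
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std if std else 0.0 for v in values]

# Hypothetical trip-duration column (minutes) with one gap and one extreme value.
durations = [12.0, None, 15.0, 14.0, 300.0, 13.0]
filled = impute_median(durations)   # None -> 14.0
clipped = clip_iqr(filled)          # 300.0 is pulled down to the upper fence
scaled = zscore(clipped)
```

With only six rows the extreme value inflates Q3, so the clip is mild; on a full column the quartiles are far less sensitive to a single outlier.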

Jun 7, 2025

This project is a complete implementation of Vision Transformer (ViT) applied to small-scale datasets (especially CIFAR-10), including extensive exploration.
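ViT treats an image as a sequence of flattened patches, so the patch size fixes the sequence length. The patch size used here is not stated; the sketch assumes 4×4 patches on 32×32×3 CIFAR-10 images plus one class token, and uses plain nested lists instead of PyTorch tensors so it runs anywhere. `patchify` and `num_tokens` are hypothetical helpers.

```python
def num_tokens(image_size=32, patch_size=4, cls_token=True):
    # Sequence length seen by the Transformer: (H / P) * (W / P) patches, plus [CLS].
    if image_size % patch_size:
        raise ValueError("image_size must be divisible by patch_size")
    return (image_size // patch_size) ** 2 + (1 if cls_token else 0)

def patchify(image, patch_size):
    # Split an H x W x C image (nested lists) into row-major, flattened P*P*C patches.
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for dy in range(patch_size):
                for dx in range(patch_size):
                    flat.extend(image[top + dy][left + dx])  # append all C channels
            patches.append(flat)
    return patches

image = [[[0.0, 0.0, 0.0] for _ in range(32)] for _ in range(32)]  # dummy 32x32 RGB
patches = patchify(image, 4)
print(len(patches), len(patches[0]), num_tokens(32, 4))  # 64 48 65
```

Each 48-value patch would then be linearly projected to the embedding dimension. With the 16×16 patches common at ImageNet scale, a 32×32 image yields only 4 patches, which is one reason smaller patches are typical on CIFAR-10.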

May 31, 2025

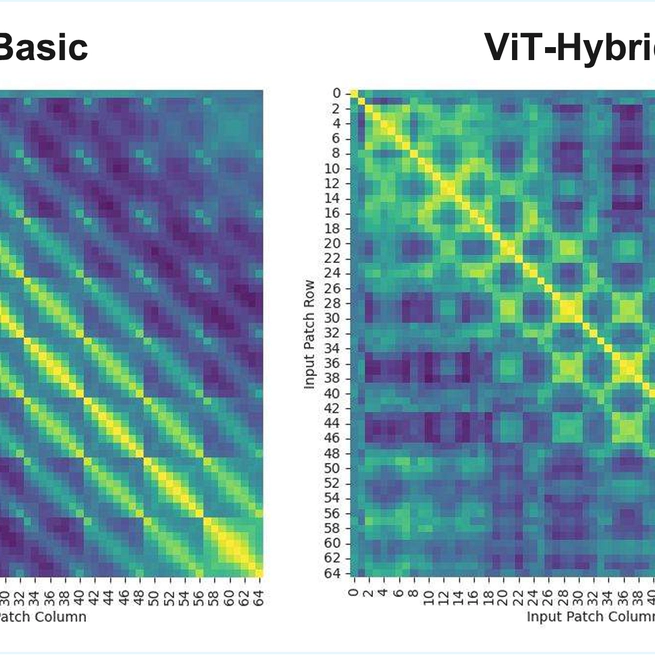

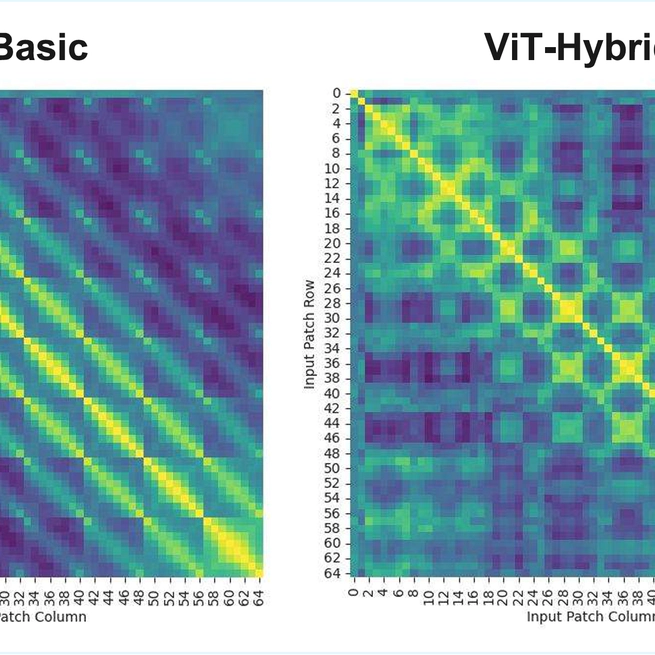

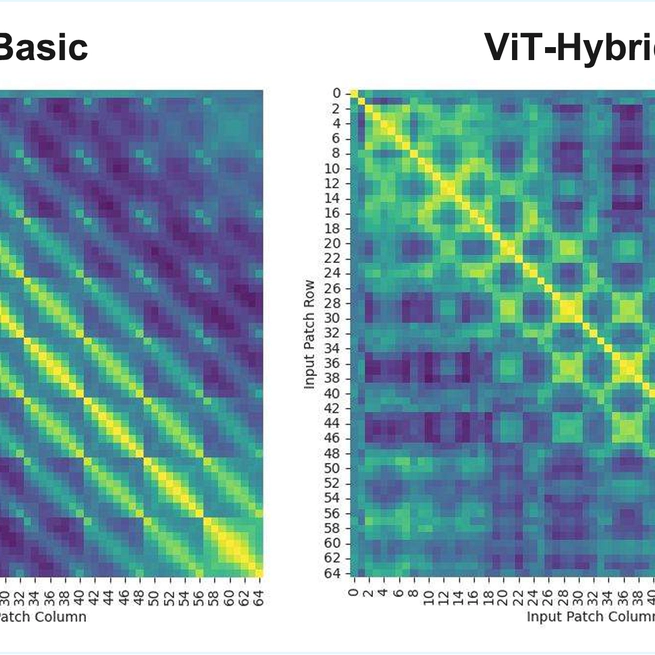

ViT-torch: Vision Transformer on CIFAR-10 (PyTorch)

This project is a complete implementation of Vision Transformer (ViT) applied to small-scale datasets (especially CIFAR-10), including:
- 🎯 Model implementations with various configurations (native ViT, ResNet+ViT hybrid, different patch/heads/blocks setups, Stochastic Depth/DropPath, etc.)
- 🌹 Training and evaluation scripts (with learning rate schedulers: Warmup/Linear/Cosine/Constant-Cosine/Warmup-Constant-Cosine)
- 🧩 Data augmentation (RandomCrop+Paste, MixUp, CutMix, RandAugment, and batch random augmentation)
- 📈 Visualization and analysis (attention maps, attention distance, gradient rollout, feature maps, positional embedding similarity)
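The scheduler names listed above are not defined further. One plausible reading of Warmup-Constant-Cosine, sketched here as an assumption: linear warmup, a constant hold at the base rate, then cosine decay to `min_lr`. With `hold_steps=0` it reduces to plain warmup plus cosine. The function name and parameters are hypothetical.

```python
import math

def warmup_hold_cosine_lr(step, total_steps, base_lr, warmup_steps=0, hold_steps=0, min_lr=0.0):
    # Phase 1: linear warmup from base_lr / warmup_steps up to base_lr.
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    # Phase 2: hold the learning rate constant at base_lr.
    if step < warmup_steps + hold_steps:
        return base_lr
    # Phase 3: cosine decay from base_lr to min_lr over the remaining steps.
    decay_steps = max(1, total_steps - warmup_steps - hold_steps)
    progress = min(1.0, (step - warmup_steps - hold_steps) / decay_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# 100 steps: 10 warmup, 20 constant, 70 cosine decay.
schedule = [warmup_hold_cosine_lr(s, 100, 1e-3, warmup_steps=10, hold_steps=20) for s in range(101)]
```

In PyTorch, a per-step function like this could be wrapped in `torch.optim.lr_scheduler.LambdaLR` by dividing by `base_lr` to get a multiplier.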

May 31, 2025

I conducted extensive experiments comparing frame division methods and model performances, with rich visualizations.

May 15, 2025

📈 I conducted extensive experiments comparing frame division methods and model performances, with rich visualizations.

May 5, 2025

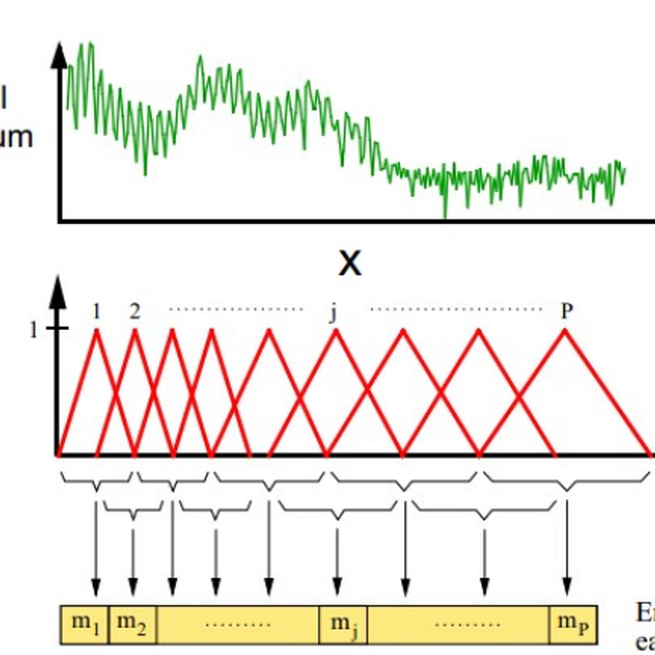

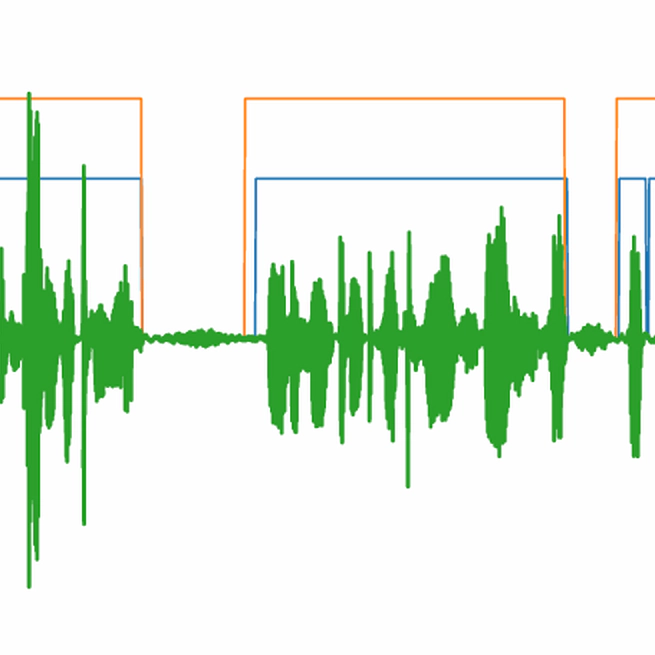

🎯 Voice Activity Detection (VAD), or voice endpoint detection, identifies the time segments of an audio signal that contain speech. It is a critical preprocessing step for automatic speech recognition (ASR) and voice wake-up systems. This project lays the groundwork for my upcoming ASR project 🤭.

📈 Workflow Overview: the VAD pipeline processes a speech signal in the following stages: Preprocessing, Framing, Windowing, Feature Extraction, Binary Classification, and Time-Domain Restoration.

🍻 Project Highlights: I conducted extensive experiments comparing frame division methods (frame length and shift) and model performances, with rich visualizations. For details, see the report in ‘vad/latex/’.

If you're interested in voice technologies, let's connect! 🔗 For more details, please visit my blog post: VAD
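The six stages can be sketched end to end in plain Python. This is a minimal illustration, not the project's implementation: it assumes a 0.97 pre-emphasis filter for preprocessing, a Hamming window, short-time energy as the only feature, and a relative energy threshold standing in for the binary classifier (the project compared trained models). The 25 ms / 10 ms frame length and shift are common defaults chosen for illustration.

```python
import math

def pre_emphasis(signal, alpha=0.97):
    # Preprocessing: y[n] = x[n] - alpha * x[n-1] boosts high frequencies.
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]

def frame_signal(signal, frame_len, frame_shift):
    # Framing: overlapping frames of frame_len samples, hopping frame_shift samples.
    if len(signal) < frame_len:
        return []
    n_frames = 1 + (len(signal) - frame_len) // frame_shift
    return [signal[i * frame_shift : i * frame_shift + frame_len] for i in range(n_frames)]

def hamming(n):
    # Windowing: taper frame edges to reduce spectral leakage.
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def short_time_energy(frames):
    # Feature extraction: windowed short-time energy per frame.
    if not frames:
        return []
    window = hamming(len(frames[0]))
    return [sum((x * w) ** 2 for x, w in zip(frame, window)) for frame in frames]

def classify(energies, ratio=0.1):
    # Binary classification stand-in: speech if energy exceeds ratio * max energy.
    threshold = ratio * max(energies)
    return [e > threshold for e in energies]

def to_segments(labels, frame_len, frame_shift, sample_rate):
    # Time-domain restoration: merge consecutive speech frames into (start_s, end_s).
    segments, start = [], None
    for i, is_speech in enumerate(labels + [False]):  # sentinel closes a trailing run
        if is_speech and start is None:
            start = i
        elif not is_speech and start is not None:
            end_sample = (i - 1) * frame_shift + frame_len
            segments.append((start * frame_shift / sample_rate, end_sample / sample_rate))
            start = None
    return segments

sample_rate = 8000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]
signal = [0.0] * sample_rate + tone + [0.0] * sample_rate  # 1 s silence, 1 s tone, 1 s silence
frames = frame_signal(pre_emphasis(signal), frame_len=200, frame_shift=80)  # 25 ms / 10 ms at 8 kHz
labels = classify(short_time_energy(frames))
segments = to_segments(labels, frame_len=200, frame_shift=80, sample_rate=sample_rate)
print(segments)  # one segment, roughly from 1 s to 2 s
```

Changing `frame_len` and `frame_shift` is the kind of frame-division trade-off the experiments explore: longer frames smooth the energy curve, while a shorter shift gives finer boundary resolution.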

May 4, 2025

Establishing and Solving a Mathematical Optimization Model

This project is a lab for the course “Linear Optimization and Convex Optimization”. It discusses a classic optimization problem, the water-filling problem; please refer to the project description file for details. Following the mathematical derivation in the description file, I transformed the original problem into a classic optimization problem, implemented two optimization algorithms (gradient descent and Newton's method), and proposed a binary-search algorithm for the original problem. I also built two line search modes and ran extensive comparative experiments; please refer to the report file for details. As required, I also compared my algorithms with Monkey-search. As the saying goes, 1xxxxx monkeys can't write Shakespeare's works. I am currently working on model optimization and convergence analysis. If you are interested in this, please get in touch!
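The project description file is not reproduced here, so the sketch below assumes the textbook water-filling formulation: maximize Σ log(αᵢ + xᵢ) subject to xᵢ ≥ 0 and Σ xᵢ = 1. Its KKT conditions give xᵢ = max(0, L − αᵢ) for a water level L = 1/ν, and because the total filled volume grows monotonically with L, the level can be found by bisection. This is a generic illustration of a binary search on the water level, not necessarily the algorithm proposed in the report; `water_filling` and its tolerances are hypothetical.

```python
def water_filling(alpha, total=1.0, tol=1e-12, max_iter=200):
    """Bisection on the water level L such that sum(max(0, L - a_i)) == total."""
    if total < 0:
        raise ValueError("total must be non-negative")
    lo = min(alpha)          # at this level nothing is filled yet
    hi = max(alpha) + total  # at this level every x_i >= total, so the sum is >= total
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        filled = sum(max(0.0, mid - a) for a in alpha)
        if filled > total:
            hi = mid  # water level too high
        else:
            lo = mid  # water level too low (or exact)
        if hi - lo < tol:
            break
    level = 0.5 * (lo + hi)
    return level, [max(0.0, level - a) for a in alpha]

level, x = water_filling([0.1, 0.5, 1.0])
print(round(level, 6), [round(v, 6) for v in x])  # 0.8 [0.7, 0.3, 0.0]
```

Channels whose floor αᵢ lies above the water level (here the third one) receive nothing, which is the behavior that gives the problem its name.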

Jan 12, 2025