🎉 I recently open-sourced my VAD project

May 5, 2025 · 1 min read

Xuankun Yang

GitHub Repo: VAD

Voice Activity Detection (VAD)

Summary

🎯 Voice Activity Detection (VAD), also called voice endpoint detection, identifies the time segments of an audio signal that contain speech. It is a critical preprocessing step for automatic speech recognition (ASR) and voice wake-up systems. This project lays the groundwork for my upcoming ASR project 🤭.

📈 Workflow Overview: The VAD pipeline processes a speech signal as follows:

- Preprocessing: Apply pre-emphasis to enhance high-frequency components.

- Framing: Segment the signal into overlapping frames and assign each frame a speech/non-speech label.

- Windowing: Apply window functions to mitigate boundary effects.

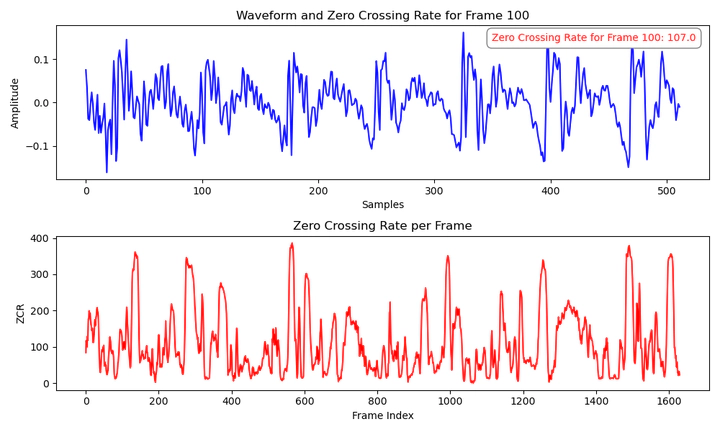
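The first three steps can be sketched in plain Python as follows. This is a minimal illustration, not the code from the repository: the function names are hypothetical, pre-emphasis uses the common form y[n] = x[n] − αx[n−1] with an assumed α = 0.97, per-sample 0/1 speech annotations are an assumed label format (each frame gets a majority-vote label), and a Hamming window is assumed as the window function.

```python
import math


def pre_emphasis(signal, alpha=0.97):
    """Boost high frequencies: y[0] = x[0], y[n] = x[n] - alpha * x[n-1]."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]


def frame_signal(signal, sample_labels, frame_len, frame_shift):
    """Cut the signal into overlapping frames and give each frame a 0/1 label.

    sample_labels holds one 0 (non-speech) or 1 (speech) value per sample
    (an assumed annotation format). A frame is labeled speech when at least
    half of its samples are speech. Trailing samples that do not fill a
    whole frame are dropped.
    """
    frames, frame_labels = [], []
    for start in range(0, len(signal) - frame_len + 1, frame_shift):
        frames.append(signal[start:start + frame_len])
        speech_samples = sum(sample_labels[start:start + frame_len])
        frame_labels.append(1 if 2 * speech_samples >= frame_len else 0)
    return frames, frame_labels


def hamming_window(frame_len):
    """Hamming window w[n] = 0.54 - 0.46 * cos(2*pi*n / (N - 1)), for N >= 2."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
            for n in range(frame_len)]


def apply_window(frames):
    """Multiply every frame sample-by-sample by a Hamming window of the same length."""
    if not frames:
        return []
    window = hamming_window(len(frames[0]))
    return [[s * w for s, w in zip(frame, window)] for frame in frames]
```

For example, with 16 kHz audio and an assumed 25 ms frame (400 samples) with a 10 ms shift (160 samples), one second of audio yields 1 + ⌊(16000 − 400) / 160⌋ = 98 frames. The window tapers each frame toward its edges, which reduces the spectral leakage caused by cutting the signal abruptly at frame boundaries.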

- Feature Extraction: Extract a set of frame-level features, such as short-time energy, zero-crossing rate, and MFCCs.

- Binary Classification: Train models (DNN, Logistic Regression, Linear SVM, GMM) to classify frames as speech or non-speech.

- Time-Domain Restoration: Convert frame-level predictions to time-domain speech segments.
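The remaining steps can be sketched in the same hedged way. Again, this is not the repository's code: the function names are hypothetical, only two of the listed features are shown (short-time energy as a sum of squares and zero-crossing rate as the fraction of sign changes; MFCCs are omitted), logistic regression trained with plain batch gradient descent stands in for the four models, and time-domain restoration simply merges runs of consecutive speech frames without any smoothing.

```python
import math


def short_time_energy(frame):
    """Sum of squared samples in the frame."""
    return sum(s * s for s in frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    if len(frame) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def standardize(features):
    """Z-score each feature dimension so gradient descent is well conditioned."""
    cols = list(zip(*features))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return [[(x[i] - means[i]) / stds[i] for i in range(len(cols))] for x in features]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(features, labels, lr=0.1, epochs=500):
    """Batch gradient descent on the mean cross-entropy loss."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(features, w, b):
    """Label a frame as speech (1) when the decision value is positive."""
    return [1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0 for x in features]


def frames_to_segments(frame_preds, frame_len, frame_shift, sample_rate):
    """Merge runs of consecutive speech frames into (start_s, end_s) segments.

    Frame i covers samples [i * frame_shift, i * frame_shift + frame_len).
    """
    segments, start = [], None
    for i, p in enumerate(list(frame_preds) + [0]):  # sentinel closes a trailing run
        if p == 1 and start is None:
            start = i
        elif p != 1 and start is not None:
            end_sample = (i - 1) * frame_shift + frame_len
            segments.append((start * frame_shift / sample_rate, end_sample / sample_rate))
            start = None
    return segments
```

In use, each frame would become a feature vector such as [energy, zcr], the vectors would be standardized and paired with the frame labels from the framing step to train the classifier, and the predicted 0/1 sequence would be passed to `frames_to_segments` to get speech segments in seconds. Because frames overlap, a run from frame s to frame e spans samples s·shift up to e·shift + frame_len, which is why the end time uses the last frame's start plus the frame length.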

🍻 Project Highlights:

I conducted extensive experiments comparing framing configurations (frame length and frame shift) and the performance of each model, with rich visualizations. For details, see the report in vad/latex/. If you're interested in voice technologies, let's connect!